👋 Introduction

Welcome to the documentation of the ln-history project. In this guide we will build the infrastructure to persist gossip messages of the Bitcoin Lightning Network. I tried to make the setup process as simple as possible. If you struggle at some point or find an error, feel free to create a GitHub issue here.

Project structure

Depending on your preferences, ln-history can be used in three different modes:

| Mode | Description | Difficulty |
|---|---|---|
| direct request | Request the ln-history infrastructure and parse results locally | very easy |
| bulk import | Bulk insert your collected gossip messages into the database once | easy |
| real time | Insert your collected gossip messages in real time into the database | harder |

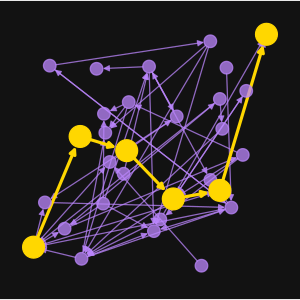

The diagram shows which steps you need to complete to set up the infrastructure. The platform supports running bulk-import and real-time simultaneously.

The core of the platform is a PostgreSQL database called ln-history-database, which is set up first. After that you can decide whether you want to set up the bulk-import mode, the real-time mode, or both.

_I recommend that beginners start with the easiest mode, direct request, to familiarize themselves with the platform._

The bulk-import mode needs the insert-gossip Docker container running. It reads raw gossip messages, parses them and ultimately inserts them into the ln-history-database.

To get the real-time mode working, you need to set up a Core Lightning node with the Gossip Publisher plugin running. This plugin publishes collected gossip messages via ZeroMQ to the gossip-syncer. This microservice uses a Valkey cache to check for duplicates and to compute statistics about the collected gossip. The gossip-syncer then filters the gossip messages and publishes them to a specified topic on a Kafka instance, which acts as an event streaming platform. The gossip-post-processor subscribes to that topic and structures the gossip messages so that they can be inserted efficiently into the ln-history-database.
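The duplicate check in this pipeline can be sketched in a few lines. This is only a minimal illustration and not the actual gossip-syncer code: a plain Python set stands in for the Valkey cache, and using the SHA-256 digest of the raw message as the cache key is an assumption made for this sketch. The function name `filter_new_gossip` is hypothetical.

```python
import hashlib


def filter_new_gossip(raw_messages, seen):
    """Return only the messages whose digest is not yet in `seen`.

    `seen` is a plain set standing in for the Valkey cache (assumption).
    """
    fresh = []
    for raw in raw_messages:
        digest = hashlib.sha256(raw).hexdigest()  # hypothetical cache key
        if digest in seen:
            continue  # duplicate: this message was already forwarded
        seen.add(digest)
        fresh.append(raw)
    return fresh


if __name__ == "__main__":
    seen_cache = set()
    batch = [b"\x01\x00aaa", b"\x01\x02bbb", b"\x01\x00aaa"]
    # Duplicates inside one batch are dropped.
    print(len(filter_new_gossip(batch, seen_cache)))
    # Sending the same batch again yields nothing new.
    print(len(filter_new_gossip(batch, seen_cache)))
```

Only the messages returned by such a filter would be published to the Kafka topic. A shared cache instead of a local set lets the check survive restarts of the service.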

After finishing one (or both) of these setups, you might want to set up a backend that exposes an API to query your database. I created the Docker container ln-history-api for this. It is a .NET backend featuring an authorized REST API with endpoints that run queries for:

- Snapshot generation for a given timestamp

- Snapshot diff calculation for two given timestamps

- Gossip by node_id for a given timestamp

- Gossip by scid for a given timestamp
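To make the snapshot and snapshot diff queries more concrete, here is a small sketch of the idea. It does not reflect the real database schema or the queries inside ln-history-api: the `ChannelRecord` class, its `from_ts` and `to_ts` fields, and both functions are hypothetical and only assume that each channel is valid during some time interval.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChannelRecord:
    """Hypothetical record: a channel and the interval in which it existed."""
    scid: str
    from_ts: int            # unix time at which the channel was first seen
    to_ts: Optional[int]    # unix time at which it disappeared, None if still open


def snapshot(records, ts):
    """Return the set of scids that are valid at timestamp `ts`."""
    return {
        r.scid
        for r in records
        if r.from_ts <= ts and (r.to_ts is None or ts < r.to_ts)
    }


def snapshot_diff(records, ts_a, ts_b):
    """Return (added, removed) scids when going from ts_a to ts_b."""
    before, after = snapshot(records, ts_a), snapshot(records, ts_b)
    return after - before, before - after


if __name__ == "__main__":
    records = [
        ChannelRecord("1x1x0", from_ts=10, to_ts=50),
        ChannelRecord("2x1x0", from_ts=30, to_ts=None),
    ]
    print(snapshot(records, 20))
    print(snapshot_diff(records, 20, 60))
```

A snapshot is then the state of the network at one point in time, and a diff is the set of changes between two such states.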

Note that the results are returned as raw bytes. They need to be parsed by the client.

The lnhistoryclient is a Python package that provides classes, functions and interfaces to easily parse the gossip results (in raw bytes) into more readable formats, such as JSON and Python networkx graphs.
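To give an impression of what parsing raw bytes means, the following sketch reads only the message type. It does not use the lnhistoryclient API. It relies on the Lightning message format, where every message starts with a 2-byte big-endian type, and on the type numbers of the three gossip messages from BOLT #7. It assumes that the raw bytes you receive begin with that type prefix. The function name `gossip_type` is hypothetical.

```python
import struct

# Message type numbers of the gossip messages as defined in BOLT #7.
GOSSIP_TYPES = {
    256: "channel_announcement",
    257: "node_announcement",
    258: "channel_update",
}


def gossip_type(raw: bytes) -> str:
    """Read the 2-byte big-endian type prefix of a raw Lightning message."""
    if len(raw) < 2:
        raise ValueError("message too short to contain a type prefix")
    (type_id,) = struct.unpack(">H", raw[:2])
    return GOSSIP_TYPES.get(type_id, f"unknown({type_id})")


if __name__ == "__main__":
    # 0x0102 is 258, the type of channel_update; the payload here is a dummy.
    print(gossip_type(bytes.fromhex("0102") + b"\x00" * 8))
```

Everything after the type prefix depends on the message type, which is why a dedicated parser is useful.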

Architecture

Here you can see a visualization of the architecture.

I do not have any gossip messages

You can still use this tool.

Dr. Christian Decker collected and uploaded gossip messages ranging from 01-2018 to 09-2023.

See this repository, which provides links to his gossip messages.

You can download this data for free.

With ln-history I provide the infrastructure to analyze the gossip messages. Maybe this is all you are looking for?

Important notice

The Lightning Network, as a second-layer technology, uses the Bitcoin blockchain. It is therefore necessary to have access to the Bitcoin blockchain.

If you've set up a Bitcoin Core node with txindex=1 enabled, you can run the btc-rpc-explorer service on your bitcoin node to browse and query blockchain data via the node's RPC interface.

Currently both the real-time and the bulk-import mode of the platform work by sending requests to a btc-rpc-explorer. If you insert thousands of channel_announcement messages at once (as the current implementation of insert-gossip does), inserting them takes time (and bandwidth).

The bulk-import mode would very likely work better with Iterate Bitcoin. I will start working on a solution based on it in the near future.
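The reason each channel_announcement needs blockchain access is that it carries a short_channel_id (scid), which points to the funding output of the channel on the Bitcoin blockchain. According to BOLT #7, the scid is an 8-byte value consisting of 3 bytes block height, 3 bytes transaction index and 2 bytes output index. The sketch below only decodes these three parts. How ln-history then queries the btc-rpc-explorer is not shown, and the function names are hypothetical.

```python
from typing import Tuple


def decode_scid(scid: int) -> Tuple[int, int, int]:
    """Split an 8-byte short_channel_id into its three components."""
    block_height = scid >> 40             # most significant 3 bytes
    tx_index = (scid >> 16) & 0xFFFFFF    # next 3 bytes
    output_index = scid & 0xFFFF          # least significant 2 bytes
    return block_height, tx_index, output_index


def format_scid(scid: int) -> str:
    """Render the scid in the human-readable form heightxindexxoutput."""
    return "x".join(str(part) for part in decode_scid(scid))


if __name__ == "__main__":
    # Build an example scid from made-up values and decode it again.
    example = (700000 << 40) | (1234 << 16) | 1
    print(decode_scid(example))
    print(format_scid(example))
```

Each decoded scid corresponds to one lookup of a block and a transaction, which adds up when thousands of messages are inserted at once.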

Prerequisites

The infrastructure relies on Docker (and Docker Compose) to make it as platform independent as possible. Nonetheless, I highly recommend running it on a Linux machine (specifically Ubuntu), as that’s the only environment I’ve tested it on.

Docker installation

We follow the official documentation.

- Set up Docker's apt repository

- Install the (latest) Docker packages

ln-history@host:~₿ sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

- Verify that the installation was successful by running the hello-world image. You should see this result:

Hello from Docker! This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

- The Docker client contacted the Docker daemon.

- The Docker daemon pulled the "hello-world" image from the Docker Hub. (amd64)

- The Docker daemon created a new container from that image which runs the executable that produces the output you are currently reading.

- The Docker daemon streamed that output to the Docker client, which sent it to your terminal.

To try something more ambitious, you can run an Ubuntu container with: $ docker run -it ubuntu bash

Docker compose installation

We follow the official documentation.

- We download and install the Docker Compose standalone

ln-history@host:~₿ curl -SL https://github.com/docker/compose/releases/download/v2.39.1/docker-compose-linux-x86_64 -o /usr/local/bin/docker-compose

- We apply executable permissions to the standalone binary in the target path for the installation

ln-history@host:~₿ chmod +x /usr/local/bin/docker-compose

Folder structure

On your machine navigate to your desired location where the project will live. You might want to create a specific user for that.

Create user (optional)

You can then add this user to the sudo group if needed:

Switch to the new user:

Folder creation

Each service in this project lives in its own isolated folder. This keeps configuration files, environment variables, and Docker setup neatly organized and modular.

The structure looks like this:

We will now create the project root folder ln-history, in which all the services will be created.

Ready - Set - Go

Follow the steps in the same order as the sections appear in the navbar on the left-hand side.

In case you want to understand the structure and format of Lightning Network gossip messages, please check out the second-to-last page, Gossip.

❇️ Let's jump into the database setup!